CNC Enclosure

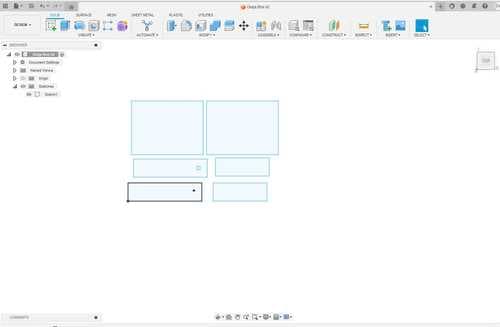

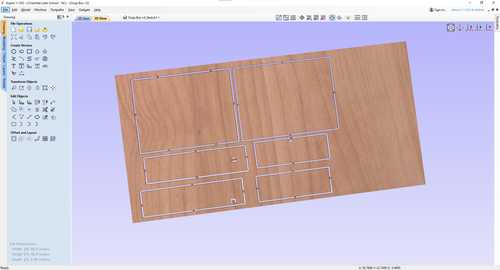

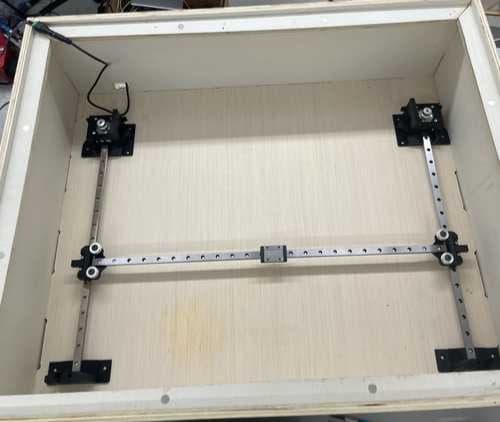

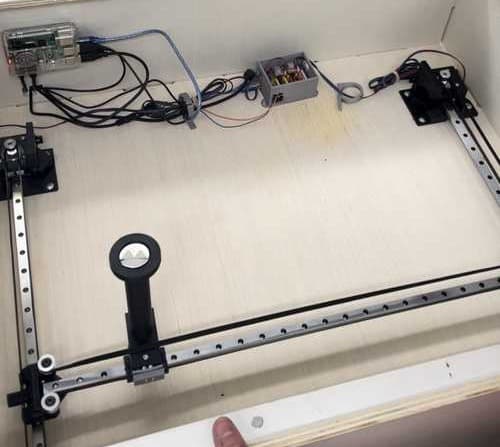

The enclosure was parameterized in Fusion 360 to balance portability with enough interior volume for the gantry and electronics. We iterated with laser-cut cardboard mockups before milling the final birch panels on a ShopBot.
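Since the enclosure was parameterized around its interior volume, a parametric model of this kind can derive each panel's blank size from the interior dimensions and the stock thickness. The Python sketch below is minimal and makes some assumptions. The `BoxParams` fields, the helper names, and the example numbers are hypothetical, not values from the actual Fusion 360 model. It also assumes every edge is finger-jointed, so each blank reaches the box's outer dimension in both directions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxParams:
    """Hypothetical enclosure parameters in mm (not the real model's values)."""
    inner_w: float  # interior width
    inner_d: float  # interior depth
    inner_h: float  # interior height
    stock_t: float  # sheet (panel) thickness


def panel_blanks(p: BoxParams) -> dict[str, tuple[float, float]]:
    """Bounding-rectangle size of each pair of finger-jointed panels.

    Assumes finger joints on every edge: each blank extends to the box's
    outer dimension on both axes, and fingers are later notched stock_t deep.
    """
    ow = p.inner_w + 2 * p.stock_t  # outer width
    od = p.inner_d + 2 * p.stock_t  # outer depth
    oh = p.inner_h + 2 * p.stock_t  # outer height
    return {
        "top/bottom": (ow, od),
        "front/back": (ow, oh),
        "left/right": (od, oh),
    }


def sheet_area(p: BoxParams) -> float:
    """Total blank area of all six panels, a rough proxy for material and weight."""
    return 2 * sum(a * b for a, b in panel_blanks(p).values())


if __name__ == "__main__":
    params = BoxParams(inner_w=400.0, inner_d=300.0, inner_h=200.0, stock_t=12.0)
    for name, (a, b) in panel_blanks(params).items():
        print(f"{name}: {a} x {b} mm")
    print(f"total blank area: {sheet_area(params)} mm^2")
```

Every blank is derived from the same four numbers. Changing `stock_t` alone would therefore let one model produce both thin cardboard mockups and thicker final panels.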

- Finger joints with dogbones for a press-fit lid held in place by embedded magnets.

- Ports for USB access, microphone routing, and a removable planchette bay.

- Laser-engraved board artwork with a custom punctuation set.
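The dogboned finger joints in the first bullet can be sketched in a few lines. This is a minimal Python sketch with two assumptions. First, each edge is split into an odd number of equal fingers near a target width. Second, each inside corner gets a dogbone relief by placing the tool center one tool radius from the corner along the corner's bisector, so the round end mill's edge just reaches the sharp corner. `finger_layout`, `dogbone_center`, and the example dimensions are hypothetical and not taken from the actual model or ShopBot toolpaths.

```python
import math


def finger_layout(edge_len: float, target_w: float) -> tuple[int, float]:
    """Split an edge into an odd number of equal fingers close to target_w.

    An odd count lets the edge start and end on a finger, so the mating
    panel simply uses the complementary pattern.
    """
    if edge_len <= 0 or target_w <= 0:
        raise ValueError("edge_len and target_w must be positive")
    n = max(1, round(edge_len / target_w))
    if n % 2 == 0:
        # n is even (so at least 2); choose the neighbouring odd count
        # whose finger width lands closer to the target.
        lower, upper = n - 1, n + 1
        if abs(edge_len / lower - target_w) <= abs(edge_len / upper - target_w):
            n = lower
        else:
            n = upper
    return n, edge_len / n


def dogbone_center(corner: tuple[float, float], bisector_deg: float,
                   tool_d: float) -> tuple[float, float]:
    """Tool-center position for a dogbone relief at an inside corner.

    bisector_deg is the direction from the corner into the cut-away region.
    Placing the center one tool radius along it makes the cutter circle pass
    exactly through the corner, slightly overcutting both walls.
    """
    r = tool_d / 2
    a = math.radians(bisector_deg)
    return corner[0] + r * math.cos(a), corner[1] + r * math.sin(a)


if __name__ == "__main__":
    print(finger_layout(320.0, 20.0))
    print(dogbone_center((0.0, 0.0), 45.0, 6.35))
```

For example, `finger_layout(320.0, 20.0)` would give 16 fingers, which is even, so it picks 17 fingers of about 18.8 mm each. The bisector placement is only one common dogbone variant. "T-bone" reliefs that push the circle along a single wall are another option when the overcut should stay hidden on one face.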